NSFW AI Chat Filter Settings are configurations for automated content moderation.

Introduction

Online environments have experienced an influx of varying types of content, some of which are categorized as Not Safe For Work (NSFW). This section sheds light on the origin and essence of NSFW content and the indispensable role of AI chat filters in regulating such content.

Background on NSFW Content

NSFW, an abbreviation for “Not Safe For Work”, refers to digital content that is inappropriate to view in professional settings. It can range from explicit adult material to violent or graphic imagery. As the internet grew in popularity from the early 1990s, so did the proliferation of NSFW content. One widely circulated estimate claimed that by around 2005 there were approximately 420 million pages of such content and that it accounted for about 12% of all websites. Figures like these are difficult to verify and mix different units (pages versus sites), so they are best read as rough indications of scale.

One well-documented acceleration came in the late 2000s with the rise of user-generated content platforms such as Reddit and Tumblr, where the ease of sharing allowed NSFW material to grow quickly and substantially. Reddit, for instance, reportedly hosted over 10,000 NSFW-themed subreddits by the end of 2010.

Need for AI Chat Filters

With the explosion of NSFW content came the challenge of monitoring and managing it, especially on platforms built around real-time communication. Human moderation, while effective to a degree, does not scale efficiently to platforms handling millions of messages a day.

For context, consider cost and efficiency: a human moderator might cost anywhere between $30,000 and $50,000 annually. If this moderator worked 40 hours a week (about 2,080 hours a year) and spent an average of 2 minutes per piece of content, they could review roughly 62,400 pieces of content in a year. That works out to about $0.48 to $0.80 per reviewed piece, before accounting for breaks, leave, and overhead.
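The moderator arithmetic can be checked in a few lines of Python. This is a minimal sketch: the salary range, 40-hour week, and 2-minute review time come from the text, while the 52-week year and the absence of breaks, leave, or overhead are simplifying assumptions. At 2 minutes per item, a 40-hour week yields 62,400 items a year.

```python
def annual_review_capacity(hours_per_week: float, minutes_per_item: float,
                           weeks_per_year: int = 52) -> int:
    """Items one moderator can review per year (assumes no breaks, leave, or overhead)."""
    total_minutes = hours_per_week * weeks_per_year * 60
    return int(total_minutes // minutes_per_item)


def cost_per_item(annual_cost: float, items_per_year: int) -> float:
    """Average labor cost of reviewing a single item."""
    return annual_cost / items_per_year


capacity = annual_review_capacity(hours_per_week=40, minutes_per_item=2)
low = cost_per_item(30_000, capacity)
high = cost_per_item(50_000, capacity)
print(f"{capacity:,} items/year, ${low:.2f} to ${high:.2f} per item")
```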

In contrast, once an AI chat filter is set up, the marginal cost of filtering each additional piece of content is very low. This reduces the effective cost of content moderation and greatly increases review speed: depending on model size and hardware, a well-optimized model might review and categorize on the order of 500,000 pieces of content per hour. That throughput makes AI filters not just a cost-effective solution but an indispensable tool for large platforms.

However, the quality of AI moderation matters as much as its speed. Reported accuracy levels often hover around 92-95%, which, although impressive, still leaves room for error. This is why continuous training and feedback loops are crucial to improving the model’s accuracy over time.

The advantages of AI chat filters go beyond efficiency and cost. They offer real-time moderation, which is pivotal for platforms aiming to provide a safe environment for their users. By identifying and removing or flagging NSFW content almost instantly, platforms can enforce their community guidelines and protect their user base.

Understanding NSFW Content

The digital age brought forth a plethora of content, among which is the vast category of NSFW (Not Safe For Work) materials. Understanding NSFW content requires an examination of its various definitions, the influence it has online, and the distinction between user-generated content and pre-existing material.

Definitions and Categories

NSFW, standing for “Not Safe For Work”, represents digital content deemed inappropriate for professional settings. It encompasses a wide array of content, which can be broadly categorized into the following:

- Explicit Adult Content: This involves sexual or suggestive material, often found on dedicated adult websites or certain sections of broader platforms like Reddit.

- Violent or Graphic Imagery: Visual content showing severe injury, harm, or death. Sites such as LiveLeak, before it shut down in 2021, were known for hosting such content.

- Hate Speech and Offensive Language: Content that promotes hate or discrimination against specific groups based on race, gender, religion, etc. These can be found in certain forums or comment sections of controversial videos or articles.

- Drug and Substance Abuse: Visual or written content promoting or showcasing the use of illegal drugs or harmful substances.

These categories aren’t exhaustive, but they provide a general framework for understanding the type of content often flagged as NSFW.
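One way to turn these categories into filter settings is to give each its own score threshold, so that, for example, hate speech can be flagged more aggressively than drug-related content. The sketch below assumes a classifier that returns a score between 0 and 1 per category; the `Category` and `FilterSettings` names and the threshold values are hypothetical placeholders, not a standard schema.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class Category(Enum):
    """The four NSFW categories listed above (the list is not exhaustive)."""
    EXPLICIT_ADULT = "explicit_adult"
    VIOLENT_GRAPHIC = "violent_graphic"
    HATE_SPEECH = "hate_speech"
    DRUGS = "drugs"


def _default_thresholds() -> Dict[Category, float]:
    # Hypothetical values: a lower threshold flags the category more readily.
    return {
        Category.EXPLICIT_ADULT: 0.80,
        Category.VIOLENT_GRAPHIC: 0.75,
        Category.HATE_SPEECH: 0.60,
        Category.DRUGS: 0.85,
    }


@dataclass
class FilterSettings:
    thresholds: Dict[Category, float] = field(default_factory=_default_thresholds)

    def flagged_categories(self, scores: Dict[Category, float]) -> List[Category]:
        """Categories whose classifier score meets or exceeds their threshold."""
        # Categories without a configured threshold are never flagged.
        return [cat for cat, score in scores.items()
                if cat in self.thresholds and score >= self.thresholds[cat]]


settings = FilterSettings()
print(settings.flagged_categories({Category.HATE_SPEECH: 0.65,
                                   Category.EXPLICIT_ADULT: 0.30}))
```

Keeping thresholds per category means a platform can tighten one category without raising false positives in the others.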

The Impact of NSFW Content Online

NSFW content, while catering to certain audiences, can have profound implications online. First and foremost, exposure to such content can be traumatic or disturbing to unsuspecting users. There have been reports of individuals, especially younger users, stumbling upon explicit or violent content unintentionally, leading to emotional distress.

From a business perspective, NSFW content can damage a platform’s brand value and public perception. Advertisers, the primary revenue source for many online platforms, are particularly wary of having their brands associated with explicit or offensive content. For example, an advertiser might pull a $100,000 campaign from a platform if its ads are displayed alongside NSFW material, causing a significant revenue loss.

Additionally, countries with strict internet regulations might block access to platforms that don’t adequately regulate NSFW content, affecting the platform’s global reach and user base.

User-generated Content vs. Pre-existing Content

There’s a clear distinction between user-generated NSFW content and pre-existing NSFW material.

User-generated Content: This refers to NSFW material created and uploaded by platform users. It’s dynamic, unpredictable, and can be diverse in nature. Platforms like Reddit, Twitter, and TikTok primarily deal with user-generated content.

Pre-existing Content: These are NSFW materials that have been created in the past and are reused or shared on platforms. They are often found on websites that host or share older videos, images, or articles.

Managing user-generated content presents unique challenges: its unpredictable nature means platforms must be equipped with robust, real-time content filtering. Pre-existing content, on the other hand, is more static and can be categorized and managed with relative ease, although it comes with its own set of challenges, such as determining the authenticity and origin of the material.
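For pre-existing material, a common approach is to match uploads against fingerprints of items that have already been reviewed. The sketch below uses exact SHA-256 matching from Python’s standard library, which only catches byte-identical copies; production systems typically use perceptual hashes such as PhotoDNA or PDQ so that resized or re-encoded copies still match. The `KnownContentIndex` class is hypothetical.

```python
import hashlib
from typing import Dict, Optional


class KnownContentIndex:
    """Exact-match index of content that has already been reviewed and labeled."""

    def __init__(self) -> None:
        self._labels: Dict[str, str] = {}  # hex digest -> label assigned at review time

    @staticmethod
    def fingerprint(data: bytes) -> str:
        return hashlib.sha256(data).hexdigest()

    def add(self, data: bytes, label: str) -> None:
        self._labels[self.fingerprint(data)] = label

    def lookup(self, data: bytes) -> Optional[str]:
        """Return the stored label, or None if this exact content is unknown."""
        return self._labels.get(self.fingerprint(data))


index = KnownContentIndex()
index.add(b"<bytes of a previously reviewed image>", "explicit_adult")
print(index.lookup(b"<bytes of a previously reviewed image>"))
print(index.lookup(b"<slightly different bytes>"))
```

A lookup like this is cheap enough to run before the AI model, so only content not already known goes through full classification.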

AI Technologies Behind NSFW Filters

Modern platforms depend heavily on Artificial Intelligence (AI) to detect and manage NSFW content. AI’s adaptability and efficiency make it an excellent tool for content filtering. This section delves into the primary AI technologies driving NSFW filters, namely image recognition, natural language processing, and real-time monitoring mechanisms.

Image Recognition and Processing

Image Recognition has become a pivotal technology in detecting visual NSFW content. Platforms use Convolutional Neural Networks (CNNs), a category of deep neural networks, to process and analyze images.

For instance, training a CNN to identify explicit content might involve more than 500,000 labeled images, and once trained, such models can exceed 98% accuracy on their evaluation data. Reaching that level might require hardware sustaining around 5 petaFLOPS (one quadrillion, or 10^15, floating-point operations per second, a measure of processing speed) over the course of training, and the project could cost roughly $50,000 to $200,000 once hardware, software, and data costs are included.

The efficiency of these models is also commendable. A well-optimized model can process an image in less than 50 milliseconds, ensuring almost instantaneous content review.
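Per-item latency translates directly into hardware requirements. Assuming each worker processes one image at a time, a 50 ms model handles about 20 images per second per worker, so the number of workers needed is the peak upload rate divided by that figure, rounded up. This sketch ignores batching, which usually improves throughput on GPUs, and the 30% default headroom is an arbitrary assumption.

```python
import math


def workers_needed(peak_items_per_sec: float, latency_ms: float,
                   headroom: float = 0.3) -> int:
    """Workers required if each one processes items sequentially at the given latency."""
    per_worker_rate = 1000.0 / latency_ms           # items per second per worker
    required = peak_items_per_sec * (1 + headroom)  # spare capacity for traffic spikes
    return math.ceil(required / per_worker_rate)


print(workers_needed(peak_items_per_sec=2_000, latency_ms=50))
```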

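Moreover, Generative Adversarial Networks (GANs) have contributed to more sophisticated image models. A GAN pairs two neural networks, a generator and a discriminator, that compete during training: the generator learns to produce realistic synthetic images, while the discriminator learns to distinguish them from real ones. In moderation, this adversarial setup can help, for example, by generating additional training images for the classifier.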

Natural Language Processing (NLP) for Text

Natural Language Processing (NLP) addresses textual NSFW content. Platforms often employ Recurrent Neural Networks (RNNs) or Transformer-based models like BERT to understand and classify text based on context.

Building an NLP model for NSFW detection in texts requires extensive training data. For a model to reach a reliable accuracy level of 97%, it might need to be trained on over 10 million labeled text samples. The cost associated with such an endeavor, including computational, data acquisition, and manpower, could range from $100,000 to $500,000.

One of the advantages of NLP models is their ability to understand context. For instance, they can differentiate between medical terminology and explicit content, ensuring valid content isn’t mistakenly flagged.

Real-time Monitoring and Blocking

Real-time content filtering is paramount for platforms that prioritize user safety. Leveraging the power of cloud computing and efficient algorithms, platforms can analyze and make decisions on content as soon as it’s uploaded.

To achieve real-time monitoring, platforms might invest in dedicated server clusters costing around $1,000,000 annually. Such clusters can handle thousands of content pieces every second, helping a platform remain largely free of NSFW content.

The speed at which these real-time systems work is mind-boggling. A sophisticated setup can scan and decide on a piece of content in less than 10 milliseconds. However, one must factor in the challenges, such as ensuring minimal false positives while maintaining this impressive speed.

In conclusion, AI technologies have transformed the way platforms handle NSFW content. The speed, scale, and adaptability of these systems far exceed what human moderation alone can achieve, helping create safer and more reliable online environments.

Setting up AI Chat Filter

Implementing an AI chat filter involves more than just deploying an AI model. It requires a comprehensive understanding of the chat platform’s needs, meticulous model selection, extensive training, and seamless integration. This section provides a detailed guide on establishing an effective AI chat filter.

Choosing the Right AI Model

When considering AI models, it’s crucial to select one tailored to your specific requirements. Here are some considerations:

- Scope of Content: If your platform mainly deals with images, a Convolutional Neural Network (CNN) is typically the preferred choice. For textual content, Recurrent Neural Networks (RNNs) or BERT-based models are more suitable.

- Budget: Pre-trained models are available for purchase and can range from $10,000 to $100,000, depending on their sophistication. Training a model from scratch can be more expensive, potentially costing upwards of $500,000, including data acquisition, computational costs, and expert fees.

- Accuracy Requirement: Higher accuracy models are generally more expensive. For critical applications where false negatives can have significant repercussions, it’s advisable to invest in top-tier models boasting accuracy levels above 98%.

Training and Fine-tuning

Even with a pre-trained model, fine-tuning is often essential to adapt the model to the specific nuances of your platform. Here’s how you can approach this:

- Data Collection: Gather a diverse dataset representative of the content on your platform. This could include flagged messages, user reports, or manually curated samples. For a moderately accurate model, you might need at least 200,000 labeled samples.

- Model Training: Utilizing cloud platforms like AWS or Google Cloud can provide the computational power required for training. Costs can vary based on the chosen platform and model complexity, ranging from $5,000 to $50,000 for extensive training sessions.

- Continuous Learning: Set aside a budget for regular model updates. As user behavior and content evolve, the model should adapt accordingly. A yearly budget of $20,000 to $30,000 should suffice for periodic fine-tuning and training.
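Before fine-tuning, labeled samples are usually split into training and validation sets so that accuracy is measured on data the model has not seen. A stratified split keeps the share of NSFW and safe examples the same in both sets, which matters when NSFW items are rare. This is a minimal standard-library sketch; the 80/20 ratio and the fixed seed are assumptions, and scikit-learn offers the same idea through `train_test_split(..., stratify=labels)`.

```python
import random
from collections import defaultdict


def stratified_split(samples, labels, val_fraction=0.2, seed=42):
    """Split (sample, label) pairs so each label keeps its proportion in both sets."""
    by_label = defaultdict(list)
    for sample, label in zip(samples, labels):
        by_label[label].append(sample)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, val = [], []
    for label, group in by_label.items():
        rng.shuffle(group)
        n_val = round(len(group) * val_fraction)
        val.extend((s, label) for s in group[:n_val])
        train.extend((s, label) for s in group[n_val:])
    return train, val


texts = [f"msg{i}" for i in range(100)]
labels = ["nsfw" if i < 10 else "safe" for i in range(100)]
train, val = stratified_split(texts, labels)
print(len(train), len(val))
```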

Integration with Chat Platforms

Once your model is ready, the final step is integration. Here’s what to consider:

- API Integration: Most hosted AI moderation services and deployed models expose API endpoints for integration. Depending on the platform’s infrastructure, setting up this integration might require 100 to 200 development hours, translating to a cost of $10,000 to $20,000 if outsourced to professional developers.

- Real-time Analysis: Ensure your servers can handle the added load from the AI model without slowing down chat functionalities. This might mean investing in additional server capacity, with costs ranging from $1,000 to $5,000 monthly, depending on the platform’s size and traffic.

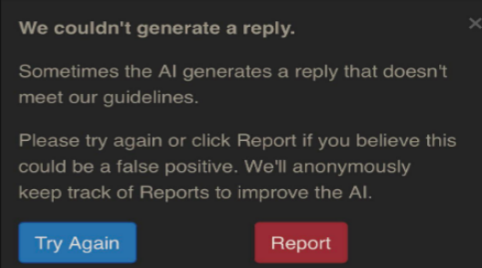

- User Feedback Loop: Implement a mechanism for users to report false positives or negatives. This feedback is invaluable for model improvement. Developing such a feature might add an extra 50 development hours, amounting to about $5,000.
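To keep chat responsive, the filter call can be given a strict time budget, with a predefined fallback when the model does not answer in time. The sketch below uses `concurrent.futures` from the standard library; `classify` is a hypothetical stand-in for whatever model or hosted API the platform uses, and holding the message on timeout is one possible policy (another is to deliver it and scan it asynchronously).

```python
import concurrent.futures as cf
import time

# Shared pool; a timed-out call keeps running in the background but no longer blocks chat.
_POOL = cf.ThreadPoolExecutor(max_workers=8)


def classify(text: str) -> float:
    """Hypothetical stand-in for a model or API call; returns an NSFW score in [0, 1]."""
    if "slow" in text:
        time.sleep(0.5)  # simulate a slow backend
    return 0.9 if "explicit" in text else 0.1


def moderate(text: str, budget_s: float = 0.05, threshold: float = 0.8) -> str:
    """Return 'allow', 'block', or 'hold' (no answer within budget: queue for later review)."""
    future = _POOL.submit(classify, text)
    try:
        score = future.result(timeout=budget_s)
    except cf.TimeoutError:
        return "hold"
    return "block" if score >= threshold else "allow"


print(moderate("hello there"))
print(moderate("slow request"))
```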

In summary, while setting up an AI chat filter involves several stages and considerations, the benefits in terms of enhanced user safety and platform integrity are immeasurable. Proper planning, budget allocation, and continuous improvement are key to harnessing the full potential of AI in content moderation.

Challenges in Filtering NSFW Content

Detecting and moderating NSFW content with AI technologies, while efficient, presents unique challenges. From inaccuracies in content detection to the subtleties influenced by culture and region, understanding these challenges is key to optimizing AI-driven NSFW filters.

False Positives and Negatives

False positives are safe items that the AI system mistakenly flags as NSFW. For a platform, the consequences can be severe, including unjustified bans or content removal that leave users dissatisfied. Consider an online art forum where a Renaissance painting, despite its historical and educational value, gets flagged. With a false-positive rate of 3%, a platform with one million daily uploads, nearly all of them safe, might wrongly flag close to 30,000 pieces of content every day.

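On the flip side, false negatives are NSFW items that go undetected, a lapse that can jeopardize platform reputation and user safety. Because a false-negative rate is measured only over NSFW content, the number of misses depends on how much NSFW material is present. On a messaging platform with 5 million daily messages, a 2% false-negative rate would let about 100,000 harmful messages through only if every message were NSFW; if, hypothetically, 5% of messages (250,000) were NSFW, roughly 5,000 would go unnoticed each day.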
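Because a false-positive rate applies only to safe items and a false-negative rate only to NSFW items, expected daily error counts depend on the share of traffic that is actually NSFW (its prevalence). A minimal sketch; the prevalence values used in the checks are hypothetical.

```python
from typing import Tuple


def expected_errors(daily_items: int, prevalence: float,
                    fpr: float, fnr: float) -> Tuple[int, int]:
    """Expected daily (false positives, false negatives).

    prevalence: share of items that are actually NSFW.
    fpr: share of safe items wrongly flagged.
    fnr: share of NSFW items the filter misses.
    """
    nsfw_items = daily_items * prevalence
    safe_items = daily_items - nsfw_items
    return round(safe_items * fpr), round(nsfw_items * fnr)


print(expected_errors(5_000_000, prevalence=0.05, fpr=0.03, fnr=0.02))
```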

To address these, platforms often employ feedback loops and regular model training. However, achieving a balance where both rates are minimal can cost up to $50,000 annually, considering the data acquisition, analysis, and retraining processes.

Handling Edge Cases

Edge cases in NSFW filtering are situations that don’t fit the typical mold. For instance, a medical discussion forum discussing reproductive health might contain terminology and images that, in other contexts, would be flagged.

Managing these requires a more granular model understanding and often manual intervention. Implementing a mechanism to identify and appropriately handle edge cases can extend the development timeline by an additional 100 hours, translating to added costs of approximately $10,000.
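One common way to reserve manual intervention for edge cases is to act automatically only when the classifier is confident and to send the uncertain middle band to human reviewers. The sketch assumes a single NSFW score in [0, 1]; the band limits are hypothetical and would be tuned on validation data, and the `trusted_context` flag (for example, a verified medical forum) is an illustrative assumption.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REVIEW = "human_review"
    BLOCK = "block"


def route(score: float, allow_below: float = 0.3, block_above: float = 0.9,
          trusted_context: bool = False) -> Action:
    """Map an NSFW score to an action, sending uncertain cases to people."""
    if score >= block_above:
        # In a trusted context, even high scores get a human look instead of auto-blocking.
        return Action.REVIEW if trusted_context else Action.BLOCK
    if score < allow_below:
        return Action.ALLOW
    return Action.REVIEW
```

Widening the review band lowers automated errors at the cost of more human workload, which is where the added development and staffing costs come from.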

Cultural and Regional Differences

What’s considered NSFW can vary significantly across cultures and regions. A swimsuit advertisement might be standard in Western countries but could be flagged in more conservative regions. Similarly, hand gestures, slang, or symbols innocuous in one culture might be offensive in another.

Recognizing these nuances necessitates a multi-layered AI model that can be regionally and culturally aware. Incorporating this level of detail might involve collaborating with local experts, which can add another $20,000 to $40,000 to the project budget, considering their expertise and consultation fees.

Moreover, platforms might consider regional model customizations. For instance, the AI model for a platform in Japan might differ from its counterpart in the Middle East due to varying content perceptions. Each regional customization can cost upwards of $30,000, considering the research, development, and testing phases.
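Regional customization can be expressed as per-region overrides layered on top of a global default configuration, so only the settings that differ need to be maintained. A minimal sketch; the region keys, the `swimwear` category, and all threshold values are hypothetical and are not recommendations for any real market.

```python
from typing import Dict

# Hypothetical global defaults: score at or above which a category is flagged.
DEFAULT_THRESHOLDS: Dict[str, float] = {
    "explicit_adult": 0.80,
    "swimwear": 0.95,
    "violent_graphic": 0.75,
}

# Hypothetical regional overrides; only differences from the defaults are listed.
REGIONAL_OVERRIDES: Dict[str, Dict[str, float]] = {
    "region_a": {"swimwear": 0.60},
    "region_b": {"violent_graphic": 0.85},
}


def thresholds_for(region: str) -> Dict[str, float]:
    """Merge a region's overrides onto a copy of the defaults (unknown regions get defaults)."""
    merged = dict(DEFAULT_THRESHOLDS)
    merged.update(REGIONAL_OVERRIDES.get(region, {}))
    return merged
```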

In conclusion, while AI-driven NSFW filters are indispensable in the digital age, they come with their own set of challenges. Recognizing and addressing these challenges helps create a safer and more harmonious environment for all users.

User Experience and Customization

Ensuring a seamless user experience is paramount for any platform employing AI-driven NSFW filters. Users should feel empowered, safe, and in control. By offering customization options and continuously refining the system based on user feedback, platforms can achieve a balance between user satisfaction and content safety.

Adjusting Sensitivity Levels

Allowing users to adjust the sensitivity of the NSFW filter tailors the user experience to individual preferences. For instance:

- Parental Controls: Parents might want to set the filter to its highest sensitivity level, ensuring maximum protection for their children. Implementing such a feature can increase development costs by $8,000, considering the additional UI/UX design and backend functionalities.

- Educational Platforms: In contrast, an academic platform discussing art or medical sciences might require a lower sensitivity to prevent unnecessary content flagging. An efficient adjustable sensitivity feature might require an investment of around 50 hours in optimization, amounting to about $5,000 in additional costs.

Incorporating sliders or toggle buttons for users to adjust the sensitivity can enhance user agency and satisfaction. However, platforms should clearly communicate the implications of each sensitivity level to prevent unintentional exposure to NSFW content.
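User-facing sensitivity levels can be mapped to a classifier threshold behind the scenes: higher sensitivity means a lower threshold, so more content is flagged. The level names and threshold values below are hypothetical; in practice they would be chosen by measuring false-positive and false-negative rates at each threshold on validation data. The sketch also shows parental controls pinning the level so a child’s account cannot lower it.

```python
from enum import Enum


class Sensitivity(Enum):
    LOW = "low"        # e.g. academic art or medical platforms
    MEDIUM = "medium"  # general default
    HIGH = "high"      # e.g. parental controls


# Hypothetical thresholds: content is flagged when its NSFW score >= threshold.
THRESHOLDS = {
    Sensitivity.LOW: 0.90,
    Sensitivity.MEDIUM: 0.70,
    Sensitivity.HIGH: 0.40,
}


def effective_level(user_choice: Sensitivity, parental_lock: bool) -> Sensitivity:
    """Parental controls pin the filter to HIGH regardless of the user's choice."""
    return Sensitivity.HIGH if parental_lock else user_choice


def is_flagged(score: float, user_choice: Sensitivity, parental_lock: bool = False) -> bool:
    level = effective_level(user_choice, parental_lock)
    return score >= THRESHOLDS[level]
```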

Whitelisting and Blacklisting

Whitelisting allows specific content or sources to bypass the NSFW filter, ensuring they aren’t mistakenly flagged. For instance, a user might whitelist an academic journal site to ensure uninterrupted access. Developing a robust whitelisting feature can add around 80 hours to the project timeline, translating to an added cost of $8,000.

Blacklisting, on the other hand, ensures content from certain sources is always flagged or blocked. For instance, if a user finds a source consistently offensive, they can blacklist it to prevent future exposure. Implementing this might require an additional $7,000, considering the UI design, backend integration, and testing phases.

Both features enhance user control over their content experience, making them feel more empowered and in tune with the platform.
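For sources identified by URL, whitelist and blacklist checks can run before the classifier. The sketch matches on a link’s hostname, including subdomains, and lets the blacklist win when a domain appears on both lists; that precedence rule, the function names, and the example domains are assumptions.

```python
from typing import Optional, Set
from urllib.parse import urlparse


def _matches(host: str, domains: Set[str]) -> bool:
    """True if host equals a listed domain or is a subdomain of one."""
    return any(host == d or host.endswith("." + d) for d in domains)


def source_decision(url: str, whitelist: Set[str], blacklist: Set[str]) -> Optional[str]:
    """Return 'block', 'allow', or None (no list entry: fall through to the AI filter)."""
    host = (urlparse(url).hostname or "").lower()
    if _matches(host, blacklist):
        return "block"  # blacklist takes precedence over whitelist
    if _matches(host, whitelist):
        return "allow"
    return None


WHITELIST = {"journal.example.org"}
BLACKLIST = {"bad.example.com"}
print(source_decision("https://journal.example.org/article/1", WHITELIST, BLACKLIST))
```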

User Feedback and Continuous Improvement

Platforms should prioritize user feedback, both for improving the NSFW filter and enhancing overall user experience. Here’s how:

- Feedback Mechanism: Introduce an easy-to-use feedback form or button, allowing users to report false positives or negatives. Developing and integrating this mechanism can cost around $6,000, given the design, development, and integration processes.

- Analysis and Iteration: Collecting feedback is just the first step. Analyzing this data and implementing changes is vital. Platforms might need to allocate a monthly budget of $5,000 for a dedicated team to handle feedback analysis and model iteration.

- Transparency Reports: Publishing periodic transparency reports detailing the number of content pieces flagged, user feedback stats, and implemented changes can enhance user trust. Producing a comprehensive bi-annual report can cost around $10,000, considering the research, design, and publication stages.
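The counts in a transparency report can be produced directly from moderation logs. The sketch below aggregates hypothetical `ModerationEvent` records with `collections.Counter`; the field names and values are illustrative assumptions, not a standard reporting format.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ModerationEvent:
    category: str          # e.g. "explicit_adult"
    action: str            # e.g. "flagged" or "removed"
    user_report: str = ""  # "", "false_positive", or "false_negative"


def summarize(events: List[ModerationEvent]) -> Dict[str, object]:
    """Aggregate raw moderation events into report-ready counts."""
    return {
        "total_events": len(events),
        "by_category": dict(Counter(e.category for e in events)),
        "by_action": dict(Counter(e.action for e in events)),
        # Only events a user disputed count toward feedback statistics.
        "user_reports": dict(Counter(e.user_report for e in events if e.user_report)),
    }


events = [
    ModerationEvent("explicit_adult", "removed"),
    ModerationEvent("hate_speech", "flagged", "false_positive"),
    ModerationEvent("explicit_adult", "flagged"),
]
print(summarize(events))
```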

In essence, enhancing user experience and customization revolves around giving users a voice and control in their content interactions. While this requires an upfront investment, the long-term benefits in terms of user loyalty and platform reputation are invaluable.

Ethical Considerations

While AI-driven NSFW filters are instrumental in ensuring user safety and content appropriateness, they pose several ethical dilemmas. It’s essential for platforms to acknowledge and address these considerations to foster trust, maintain user rights, and uphold ethical standards.

Balancing Safety with Freedom of Expression

The core ethical challenge lies in striking a balance between ensuring user safety and respecting freedom of expression. Overzealous content filtering can stifle creativity, discourse, and the sharing of essential information.

- Content Context: A piece of content might be NSFW in one context but entirely appropriate in another. For example, an artwork or a medical diagram might be flagged by an over-sensitive AI, even though it serves educational or artistic purposes. Addressing such nuances might require platforms to invest an additional $15,000 in refining their AI models for context-awareness.

- User Agency: Providing users the ability to adjust filter sensitivity, as discussed previously, is also an ethical consideration. Ensuring users have a say in their content experience respects their autonomy and right to information. Implementing such features, while beneficial, can add about $10,000 to the development budget.

- Open Dialogue: Platforms can engage in open dialogues with their community, perhaps through forums or town hall meetings, to discuss NSFW filtering policies. Hosting monthly or quarterly sessions can cost around $5,000, accounting for moderation, platform, and feedback analysis expenses.

Data Privacy and Security

Using AI models often requires analyzing vast amounts of user data, raising concerns about user privacy and data security.

- Anonymization: Ensuring that the data fed into the AI system is anonymized can protect user identities. Implementing robust anonymization algorithms can add about $8,000 to the project cost, considering the licensing, integration, and testing phases.

- Data Storage: Adhering to global data protection regulations, such as the GDPR, is crucial. Investing in secure, compliant storage solutions might increase annual costs by $20,000 to $50,000, depending on the platform’s user base and data volume.

- User Consent: Before analyzing or using user data, obtaining explicit user consent is ethically and, in many jurisdictions, legally obligatory. Integrating clear consent forms and mechanisms can incur an additional $6,000 in development expenses.
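One common building block is to replace user identifiers with keyed hashes before data reaches the training pipeline, so records from the same user can still be grouped without revealing who they are. Strictly, this is pseudonymization rather than anonymization: under the GDPR, pseudonymized data still counts as personal data, and anyone holding the key can re-link it. The sketch uses Python’s `hmac` and `hashlib`; the `PSEUDONYM_KEY` environment variable and the list of dropped fields are assumptions, and key storage and rotation are out of scope.

```python
import hashlib
import hmac
import os

# Assumption: the secret key is provisioned via an environment variable and kept out of
# the training data store. The random fallback is for demos only (pseudonyms change per run).
_KEY = os.environ.get("PSEUDONYM_KEY", "").encode() or os.urandom(32)

_DIRECT_IDENTIFIERS = {"user_id", "email", "ip"}  # hypothetical field names


def pseudonymize(user_id: str, key: bytes = _KEY) -> str:
    """Deterministic keyed hash of a user ID (HMAC-SHA256, hex-encoded)."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identifiers(record: dict) -> dict:
    """Copy a message record, dropping direct identifiers and adding a pseudonymous reference."""
    cleaned = {k: v for k, v in record.items() if k not in _DIRECT_IDENTIFIERS}
    cleaned["user_ref"] = pseudonymize(record["user_id"])
    return cleaned
```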

Potential for Misuse or Abuse

While NSFW filters aim to protect users, they can be misused, especially by those in positions of authority or by malicious actors.

- Censorship: Over-aggressive filters can inadvertently act as tools of censorship, suppressing voices and stifling discourse. Platforms need to be wary of this, ensuring transparency in their filtering policies. Publishing bi-annual transparency reports, as suggested earlier, can help mitigate this risk.

- Manipulation: Malicious actors might attempt to game the AI system, finding loopholes to disseminate harmful content. Constantly updating and refining the AI model is essential to counteract this. A dedicated team for this task might add $100,000 annually to the operational budget.
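A frequent manipulation tactic is to disguise banned words with look-alike characters, zero-width characters, or separators so they slip past the filter. Normalizing text before classification blunts some of these tricks. The sketch uses `unicodedata.normalize` with NFKC plus a small, hypothetical substitution table; because it also rewrites innocent text (for example, digits become letters), the normalized string should be used only for scoring, never shown to users. Real systems maintain far larger tables and still need retraining as evasion patterns change.

```python
import re
import unicodedata

# Zero-width characters often inserted to split words; mapping to None deletes them.
_ZERO_WIDTH = dict.fromkeys(map(ord, "\u200b\u200c\u200d\u2060\ufeff"))

# Hypothetical, deliberately small table of common character substitutions.
_LEET = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"})


def normalize_for_filter(text: str) -> str:
    """Canonicalize text before it is scored (the original is kept for display)."""
    text = unicodedata.normalize("NFKC", text)  # fold full-width and compatibility forms
    text = text.translate(_ZERO_WIDTH)          # drop zero-width characters
    text = text.casefold().translate(_LEET)     # lowercase, undo simple substitutions
    return re.sub(r"(?<=\w)[.\-_*](?=\w)", "", text)  # remove separators inside words
```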

In essence, while AI-driven NSFW filters offer unparalleled advantages in content moderation, platforms must tread carefully, ensuring they uphold ethical standards, respect user rights, and remain transparent in their operations.

Future Directions

As the digital landscape evolves, so too do the challenges and nuances associated with NSFW content. Platforms need to anticipate future trends and adapt accordingly, ensuring they remain at the forefront of content moderation without stifling user creativity or freedom. This section delves into the projected trajectory of NSFW filters, highlighting the dynamic nature of NSFW content, advancing AI capabilities, and the increasingly pivotal role of community involvement.

Evolving NSFW Content and Filter Responses

The definition and perception of NSFW content are not static. As society changes, so do the boundaries of what’s considered acceptable or offensive.

- New Content Forms: With the rise of augmented reality (AR) and virtual reality (VR), NSFW content might transition from 2D screens to more immersive experiences. Adapting NSFW filters for such content will be crucial. Investing in R&D to tackle this upcoming challenge could inflate budgets by $100,000 to $200,000 annually.

- Adaptive AI Models: AI models will need to evolve in tandem with content changes. This could mean training models on 3D datasets for VR content or enhancing real-time analysis capabilities for live AR experiences. These endeavors can add another $50,000 to the annual AI maintenance budget.

Enhancing AI Capabilities

The field of AI is burgeoning, with new algorithms, techniques, and architectures emerging frequently.

- Quantum Computing: The potential advent of quantum computing could revolutionize AI processing speeds and capabilities. If quantum computing becomes mainstream, adapting AI models to leverage its power might entail an initial investment of $500,000 to $1 million, considering the technology’s novelty and complexity.

- Zero-shot Learning: This approach lets models classify content into categories for which they saw no labeled training examples, typically by relying on descriptions of those categories. Incorporating zero-shot learning can make filters more responsive to emerging NSFW trends, enabling proactive rather than reactive content moderation. Integrating this capability can raise AI development costs by approximately $40,000.

The Role of Community Feedback and Oversight

The future of NSFW filtering is not solely dependent on technological advancements. Community involvement will play a vital role.

- Decentralized Moderation: Platforms like Reddit already involve their communities in moderation. This trend might amplify, with users having more say in content rules and filter sensitivity. Implementing a robust community-driven moderation system can increase annual operational costs by $20,000, considering the infrastructure and oversight required.

- Transparency Initiatives: Users are becoming more aware and demanding transparency from platforms. This could lead to regular audits of NSFW filtering mechanisms by third-party agencies. Hosting bi-annual audits might cost platforms around $30,000 per audit, factoring in agency fees and subsequent report implementations.

In conclusion, the future of NSFW filtering is a blend of technological innovation and increased user and community involvement. By staying agile and receptive to both advancements and feedback, platforms can ensure they remain relevant, respectful, and effective in their content moderation efforts.

Conclusion

The realm of NSFW content filtering, driven primarily by AI, serves as a testament to how technological advancements intersect with societal needs. As we navigate the digital age, the importance of maintaining safe, respectful online environments has never been clearer. However, the journey is intricate, riddled with technological challenges, ethical dilemmas, and evolving user expectations.

Achievements So Far

Reflecting on the progress made, platforms have come a long way in enhancing user safety. Sophisticated AI models, from Convolutional Neural Networks for image detection to Natural Language Processing models for text analysis, now act as the first line of defense against potentially harmful content. With investments ranging from $50,000 to $500,000, leading models now report accuracies above 98% on their evaluation data, a monumental achievement compared to just a decade ago, although real-world accuracy can be lower.

Moreover, platforms have recognized the importance of user agency. Features allowing users to adjust filter sensitivity or whitelist particular content sources ensure that AI doesn’t become an inadvertent tool of censorship. This balance, while financially demanding with costs reaching $30,000 for integration and maintenance, underscores the ethical commitment platforms have towards their user base.

Looking Forward

The horizon promises further advancements. Quantum computing could potentially transform processing speeds, although adapting AI models to it is projected to cost as much as $1 million. Similarly, as the boundaries of NSFW content shift with the advent of AR and VR, platforms will find themselves in uncharted waters, necessitating research and adaptation budgets of up to $200,000 annually.

However, the future isn’t solely about technological leaps. The role of the user community, demanding transparency and actively participating in moderation, will shape the next phase of NSFW filtering. This decentralized approach, combined with regular audits, might add an annual expenditure of $80,000 for platforms, a small price for ensuring user trust and platform integrity.

In Retrospect

As we stand at this juncture, reflecting on the strides made and the challenges ahead, one thing is clear: the journey of NSFW content filtering is a collaborative dance between technology, ethics, and community. The costs, both financial and ethical, are significant. Yet, the value of creating safe online spaces, where freedom of expression and user safety coexist harmoniously, is truly priceless.

In the words of Tim Berners-Lee, the inventor of the World Wide Web, “The power of the Web is in its universality.” As we move forward, ensuring this universality is safe for all remains our paramount duty.