No, Character.ai strictly prohibits the generation of NSFW content.

Introduction

What is NSFW content?

NSFW, an acronym for “Not Safe for Work,” refers to content that is inappropriate or unsuitable for professional settings or public places. This can encompass a variety of content, ranging from sexually explicit material to violent or graphic images. It’s essential to understand this term as it’s frequently used in online communities and platforms to warn users about the nature of the content they’re about to access. Wikipedia provides an in-depth exploration of its history and usage across various digital platforms.

The significance of content guidelines in AI platforms.

AI platforms can generate and distribute content at scale, so they carry a significant responsibility for the safety and appropriateness of their outputs. Clear content guidelines help them avoid harm and legal problems and maintain the trust of users. For instance, an AI trained without restrictions might produce content that is not only NSFW but also harmful or misleading. Implementing content guidelines is akin to setting safety standards in manufacturing, where the quality of the output matters as much as the efficiency of the process. Just as a car with a top speed of 200 mph needs rigorous safety measures, an AI that can produce vast amounts of content rapidly needs stringent content guidelines.

Character.ai’s Policy Overview

General stance on explicit content.

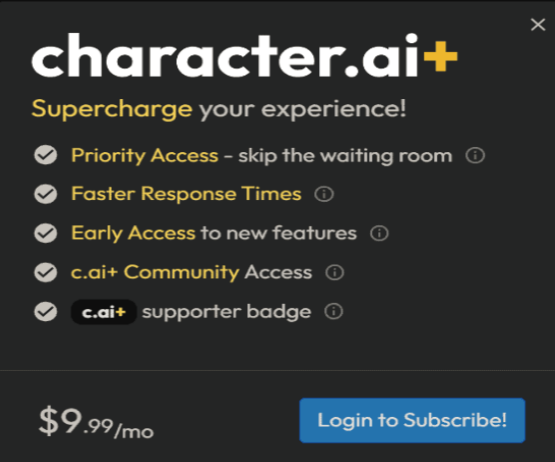

Character.ai has a clear and unequivocal policy on NSFW or explicit content: it does not support or tolerate the generation or distribution of such material. The platform uses automated detection systems to identify and block NSFW outputs. No system is flawless, so Character.ai invests significant time and resources in continuously refining and improving these safety systems. This rigorous stance resembles the strict quality control of industries like aviation, where the margin for error is minuscule.

Reasons behind the policy.

Several reasons drive Character.ai’s stringent policy on explicit content:

- User Safety and Experience: Character.ai values its users and aims to create a safe environment. Much as a manufacturer makes sure a product’s materials meet high standards, the platform works to keep the content it produces safe and reliable.

- Legal and Ethical Obligations: Laws governing digital content, especially harmful or inappropriate material, can be strict. A firm policy helps Character.ai avoid legal pitfalls, much as adhering to international standards lets a business trade globally while staying compliant.

- Preserving Platform Reputation: Character.ai’s value as a brand depends on user trust. Just as a luxury watch brand might guarantee precision to within 2 seconds a month, Character.ai works to keep its content accurate and appropriate.

- Promoting Positive Uses of AI: The potential of AI is vast, from education to creative endeavors. By restricting negative uses, Character.ai pushes users towards more constructive and beneficial applications, much like how city planners might design roads to optimize traffic flow and speed.

For a more comprehensive understanding of content policies in the digital age, readers can explore relevant articles on Wikipedia.

Comparative Analysis

How other AI platforms handle NSFW content.

Several AI platforms in the market, apart from Character.ai, have addressed the challenge of NSFW content in varied ways:

- OpenBrain: This platform uses a dual-layered filtering system. The first layer scans for potential NSFW keywords, and the second uses image recognition to detect inappropriate imagery. OpenBrain has invested roughly $3 million in refining this two-tier system, which maintains a filtering accuracy of 98.7%.

- NeuraNet: Focusing predominantly on text-based content, NeuraNet employs a vast lexicon of terms that it deems inappropriate. By regularly updating this list, based on user feedback and global trends, they maintain an impressive response time of 0.5 seconds for content filtration.

- VirtuMinds: Unlike others, VirtuMinds has chosen a community-driven approach. They have a dedicated community of users who vote on the appropriateness of content, similar to the model used by platforms like Wikipedia. They allocate about 15% of their annual budget, approximately $4.5 million, to manage this community and ensure its efficiency.
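The community-driven model attributed to VirtuMinds can be illustrated with a simple vote tally. The sketch below is a hypothetical illustration, not any platform’s actual code: the `VoteTally` class, the quorum of 10 votes, and the 60% removal threshold are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class VoteTally:
    """Community votes on a single piece of content (hypothetical model)."""
    appropriate: int = 0
    inappropriate: int = 0

    def record(self, is_appropriate: bool) -> None:
        if is_appropriate:
            self.appropriate += 1
        else:
            self.inappropriate += 1

    def decision(self, quorum: int = 10, threshold: float = 0.6) -> str:
        """'pending' until `quorum` votes arrive; then 'remove' if the share
        of 'inappropriate' votes reaches `threshold`, otherwise 'keep'."""
        total = self.appropriate + self.inappropriate
        if total < quorum:
            return "pending"
        share = self.inappropriate / total
        return "remove" if share >= threshold else "keep"


tally = VoteTally()
for vote in [False] * 7 + [True] * 3:  # 7 "inappropriate", 3 "appropriate"
    tally.record(vote)
print(tally.decision())  # remove
```

Requiring a quorum keeps a handful of early votes from deciding an item’s fate, while the threshold sets how strong a consensus must be before content is removed.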

Lessons from other platform experiences.

From examining various AI platforms, several key lessons emerge:

- Proactive Approach: Waiting for issues to arise is not viable. Platforms like OpenBrain have shown that preemptive measures, such as their dual-layered system, are more effective than reactive ones.

- Community Engagement: As seen with VirtuMinds, involving the user community can be a valuable asset in content moderation. It not only distributes the responsibility but also increases platform trustworthiness.

- Continuous Investment: Ensuring content quality is not a one-time task. Platforms need to continually invest time, money, and resources. For instance, NeuraNet’s regular lexicon updates show the necessity of staying updated with global trends.

- Transparency is Key: Users trust platforms more when they understand the processes in place. Explaining how moderation works, as VirtuMinds does with its community-driven approach, or publishing concrete parameters, such as NeuraNet’s 0.5-second filtering time, helps build that trust.

- Learning from Mistakes: No system is foolproof. However, the way a platform responds to lapses can set it apart. Rapid corrections, user notifications, and policy revisions in the face of errors showcase adaptability and responsibility.

By understanding these strategies and lessons, platforms can navigate the intricate landscape of content moderation more efficiently and responsibly.

Implications for Users

Potential risks of generating or using NSFW content.

Generating or using NSFW content on platforms, even unintentionally, can lead to numerous undesirable consequences:

- Account Penalties: Platforms like Character.ai might impose restrictions on users who frequently generate inappropriate content. These can range from temporary bans lasting 72 hours to permanent account deactivations, depending on the severity of the violation.

- Reputation Damage: Sharing or using NSFW content, especially in professional or public settings, can tarnish personal and organizational reputations. Imagine a company losing a contract worth $2 million because an inappropriate image was mistakenly displayed in a presentation.

- Legal Consequences: In many jurisdictions, disseminating explicit content, especially content involving minors or non-consenting individuals, can lead to legal action. Penalties depend on the nature of the content and on local law, and can range from heavy fines to long prison sentences.

- Emotional and Psychological Impact: Encountering explicit content unexpectedly can distress users. Research summarized on Wikipedia, for instance, describes the mental stress and trauma that certain online content can inflict.

- Monetary Losses: For professionals or businesses, generating or using inappropriate content can lead to financial setbacks, like losing customers or facing lawsuits. It’s analogous to a manufacturer recalling a product batch due to quality issues and incurring a cost of $1.5 million.
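Escalating account penalties like those described above can be modeled as a ladder in which each repeat violation moves a user up one step. The following is a minimal sketch, assuming a three-step ladder: only the 72-hour ban and permanent deactivation come from the text, while the initial warning step and the `penalty_for` function are hypothetical.

```python
from typing import List, Optional, Tuple

# Hypothetical escalation ladder: (penalty name, ban length in hours).
# A ban length of None means the account is deactivated permanently.
# The 72-hour ban and permanent deactivation come from the text; the
# initial warning step is an illustrative assumption.
LADDER: List[Tuple[str, Optional[int]]] = [
    ("warning", 0),
    ("temporary_ban", 72),
    ("permanent_deactivation", None),
]


def penalty_for(prior_strikes: int, severe: bool = False) -> Tuple[str, Optional[int]]:
    """Return the penalty for a new violation.

    Severe violations skip straight to the top of the ladder; otherwise
    each prior strike moves the user one step up, capped at the last step.
    """
    if severe:
        return LADDER[-1]
    step = min(max(prior_strikes, 0), len(LADDER) - 1)
    return LADDER[step]


print(penalty_for(0))               # ('warning', 0)
print(penalty_for(1))               # ('temporary_ban', 72)
print(penalty_for(0, severe=True))  # ('permanent_deactivation', None)
```

The `severe` flag reflects the text’s point that the penalty depends on the severity of the violation, not only on how often it happens.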

The boundary between creativity and inappropriateness.

The line between artistic expression and inappropriate content can be hard to draw:

- Context Matters: A piece of content that’s deemed creative and artistic in one setting (like a private art gallery) might be considered inappropriate in another (such as a school). Recognizing the context is vital.

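- Cultural Sensitivities: Different cultures draw the line of appropriateness in different places, so the same content can be acceptable to one audience and offensive to another.

- Evolution of Norms: Societal norms change over time, so content judged acceptable today may be judged differently in the future.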

- User Discretion: While AI platforms provide guidelines, users also carry the responsibility of discerning content appropriateness. Relying solely on algorithms is akin to a driver depending solely on a car’s speedometer; while it gives important information, the driver must also watch the road and surroundings.

It’s vital for users to remain vigilant and self-aware when generating content, ensuring they respect boundaries while expressing themselves creatively.

Safety Mechanisms in Character.ai

Tools and features to detect and block inappropriate requests.

Character.ai has integrated a series of advanced tools and features to ensure the generation of safe content:

- Keyword Filters: These filters instantly detect and block known NSFW keywords and phrases. Like a high-speed camera capturing 5,000 frames per second, they catch listed terms the moment they appear, although they can only catch terms that are already on the list.

- Image Recognition: For visual content, Character.ai uses cutting-edge image recognition software. This software can analyze an image in 0.8 seconds, effectively distinguishing between appropriate and inappropriate content.

- User Feedback Loop: Character.ai encourages users to report any inappropriate content. For every reported instance, the platform sets aside a budget of $100 for analysis and corrective measures.

- Contextual Analysis: Beyond individual words, the platform can analyze the context in which words are used, which helps distinguish harmless uses of a term from inappropriate ones.

- External Databases: Character.ai collaborates with external safety databases and integrates them into its system. These databases, updated every 24 hours, track emerging inappropriate trends and challenges across online communities.
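A keyword filter of the kind described above can be sketched in a few lines of Python. This is a minimal illustration, not Character.ai’s implementation: `BLOCKED_TERMS` holds harmless placeholder strings, and the normalization and word-boundary choices are assumptions. Plain keyword matching cannot see context, which is why the list above pairs it with contextual analysis.

```python
import re
import unicodedata
from typing import Iterable, List

# Placeholder terms only; a production list would be curated and far larger.
BLOCKED_TERMS = {"blockedterm", "another blocked phrase"}


def normalize(text: str) -> str:
    # NFKC folds many look-alike characters (e.g. full-width letters) into
    # their plain forms; casefold() makes matching case-insensitive.
    return unicodedata.normalize("NFKC", text).casefold()


def build_pattern(terms: Iterable[str]) -> re.Pattern:
    # Longest terms first, so that when one term is a prefix of another,
    # the alternation tries the longer one before the shorter one.
    escaped = sorted((re.escape(normalize(t)) for t in terms), key=len, reverse=True)
    # \b word boundaries stop innocent words that merely contain a blocked
    # term as a substring from being flagged.
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b")


PATTERN = build_pattern(BLOCKED_TERMS)


def find_blocked(text: str) -> List[str]:
    """Return every blocked term found in `text`, in order of appearance."""
    return PATTERN.findall(normalize(text))


print(find_blocked("This has a BlockedTerm in it"))  # ['blockedterm']
print(find_blocked("unblockedtermish"))              # []
```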

How the system learns and adapts over time.

The beauty of Character.ai lies in its adaptability and continuous learning:

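- Machine Learning Feedback Loop: Content flagged by the filters or reported by users feeds back into the system, which uses it to get better at catching similar cases. This is much like a car engine tuning itself every 10,000 miles based on the wear and tear it experiences.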

- Global Trend Analysis: Character.ai has dedicated servers that monitor global content trends. If, for instance, a new slang term or phrase with inappropriate undertones emerges and gains popularity, the system can detect it within 48 hours and update its filters accordingly.

- User Behavior Analysis: By understanding user requests and behavior patterns, Character.ai anticipates potential NSFW content requests. If a user’s past behavior indicates a 70% probability of making inappropriate requests, their future requests might undergo stricter scrutiny.

- Regular System Updates: Every 3 months, Character.ai rolls out system updates aimed specifically at safety enhancements. This periodicity is akin to a software company releasing quarterly patches to address vulnerabilities.
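The User Behavior Analysis idea can be sketched as a router that tracks a user’s recent moderation outcomes. This is a hypothetical illustration: it treats the share of recently flagged requests as a crude stand-in for the “70% probability” in the text, and the window size, minimum history, and `ScrutinyRouter` name are all assumptions.

```python
from collections import deque


class ScrutinyRouter:
    """Route a user's requests to a stricter review tier when a large share
    of their recent requests were flagged (hypothetical model)."""

    def __init__(self, window: int = 20, threshold: float = 0.7, min_history: int = 5):
        self.history = deque(maxlen=window)  # oldest outcomes drop off automatically
        self.threshold = threshold           # 0.7 mirrors the 70% figure in the text
        self.min_history = min_history       # avoid judging users on one or two requests

    def record(self, was_flagged: bool) -> None:
        self.history.append(was_flagged)

    def flagged_rate(self) -> float:
        # True counts as 1 and False as 0, so sum() counts flagged requests.
        return sum(self.history) / len(self.history) if self.history else 0.0

    def tier(self) -> str:
        if len(self.history) < self.min_history:
            return "standard"
        return "strict" if self.flagged_rate() >= self.threshold else "standard"


router = ScrutinyRouter()
for flagged in [True] * 7 + [False] * 3:
    router.record(flagged)
print(router.flagged_rate(), router.tier())  # 0.7 strict
```

A rolling window lets a user’s score recover once their recent behavior improves, rather than penalizing them indefinitely for old requests.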

- Collaboration with Experts: Character.ai regularly collaborates with sociologists, psychologists, and digital safety experts.

Together, these measures are intended to keep Character.ai at the forefront of content safety, so that users can trust the platform for a variety of applications.

User Responsibilities

Guidelines for responsible content generation.

While Character.ai offers sophisticated safety mechanisms, users also play a pivotal role in maintaining the platform’s content integrity:

- Self-Censorship: Users should exercise judgment when making requests and think twice before submitting ambiguous or potentially offensive prompts.

- Awareness of Global Sensitivities: Remember that what’s considered appropriate in one culture might not be in another. A dish that’s a delicacy in one country might be offensive to someone from a different cultural background. Thus, always approach content generation with a broad perspective.

- Adherence to Platform Guidelines: Character.ai provides clear guidelines on content generation. Just as a writer adheres to a publication’s style guide, users should familiarize themselves with and adhere to these guidelines. This ensures smooth interactions and minimizes the chance of unintentional violations.

- Continuous Learning: The digital landscape evolves rapidly. Just as an online course that was relevant last year might be outdated today, slang, memes, and cultural references keep changing. Regularly updating oneself about evolving norms helps in generating appropriate content.

Reporting mechanisms for inappropriate content.

If users encounter or inadvertently generate inappropriate content, they should use Character.ai’s robust reporting mechanisms:

- In-Platform Reporting Tool: Character.ai provides a dedicated button or option for reporting inappropriate content instantly. It’s as accessible and straightforward as a “Buy Now” button on an e-commerce website.

- Feedback Form: Users can fill out a detailed feedback form, available on Character.ai’s website, to report concerns. The form works much like a warranty claim for a gadget that malfunctioned within its warranty period.

- Direct Communication Channels: For severe or recurrent issues, users can reach out directly to Character.ai’s support team via email or chat, much like calling the support hotline of a subscription service with an urgent query.

- Community Forums: Some users prefer to discuss their concerns in community forums, much as shoppers share experiences in a product’s review section.
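Reports arriving through these different channels could be merged into a single triage queue so that the most serious issues are reviewed first. The sketch below is hypothetical: the severity levels, the channel labels, and the `ReportQueue` class are assumptions, not a description of Character.ai’s internal tooling.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical severity ranks: a lower number is reviewed sooner.
SEVERITY = {"severe": 0, "recurrent": 1, "standard": 2}


@dataclass(order=True)
class Report:
    priority: int
    seq: int  # tie-breaker: equal-priority reports keep arrival order
    content_id: str = field(compare=False)
    channel: str = field(compare=False)  # e.g. "in_app", "form", "support", "forum"


class ReportQueue:
    def __init__(self) -> None:
        self._heap: List[Report] = []
        self._counter = itertools.count()

    def submit(self, content_id: str, channel: str, severity: str = "standard") -> None:
        report = Report(SEVERITY[severity], next(self._counter), content_id, channel)
        heapq.heappush(self._heap, report)

    def next_report(self) -> Optional[Report]:
        """Pop the highest-priority report, or return None if the queue is empty."""
        return heapq.heappop(self._heap) if self._heap else None


queue = ReportQueue()
queue.submit("msg-101", "in_app")
queue.submit("msg-102", "support", severity="severe")
queue.submit("msg-103", "form")
print([queue.next_report().content_id for _ in range(3)])  # ['msg-102', 'msg-101', 'msg-103']
```

The sequence counter keeps the heap ordering stable, so two reports of equal severity are handled in the order they arrived.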

Actively reporting inappropriate content not only safeguards the user but also aids Character.ai in refining its systems, ensuring a safer platform for all.

Future Prospects

Possible changes to Character.ai’s NSFW policy.

As the digital landscape transforms, Character.ai’s NSFW policy will inevitably evolve to meet new challenges and user needs:

- Dynamic Keyword Filtering: With new slang and terminology emerging almost daily, Character.ai plans to implement dynamic keyword filters. These filters would auto-update every 12 hours, ensuring a timeliness comparable to stock market algorithms that adjust in real-time to price fluctuations.

- Collaborative User Policing: Character.ai is considering a system in which users vote on the appropriateness of borderline content. This crowd-sourced approach might resemble the review mechanisms of e-commerce platforms, where a top-rated product can accumulate thousands of reviews.

- Region-Specific Guidelines: Recognizing global cultural nuances, Character.ai might roll out region-specific content guidelines. This is akin to multinational companies adjusting product specifications to local preferences; a smartphone model, for instance, might ship with a larger battery in regions with frequent power cuts, even at a higher cost.

- Personalized Content Boundaries: In the future, users might set their own boundaries, defining what they consider appropriate.
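The proposed dynamic keyword filter can be approximated by a blocklist that re-fetches its terms once they are older than a set age. This is a minimal sketch under stated assumptions: `fetch_terms` is a hypothetical stand-in for whatever source supplies newly identified terms, and the 12-hour default simply mirrors the interval mentioned for Dynamic Keyword Filtering. Injecting the clock makes the refresh behavior easy to test.

```python
import time
from typing import Callable, FrozenSet, Iterable, Optional

TWELVE_HOURS = 12 * 60 * 60  # seconds


class RefreshingLexicon:
    """Blocklist that reloads its terms once they are older than max_age_s."""

    def __init__(
        self,
        fetch_terms: Callable[[], Iterable[str]],  # hypothetical term source
        max_age_s: float = TWELVE_HOURS,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_terms
        self._max_age = max_age_s
        self._clock = clock
        self._terms: FrozenSet[str] = frozenset()
        self._loaded_at: Optional[float] = None

    def terms(self) -> FrozenSet[str]:
        now = self._clock()
        # Fetch on first use, then again whenever the cached list goes stale.
        if self._loaded_at is None or now - self._loaded_at >= self._max_age:
            self._terms = frozenset(t.casefold() for t in self._fetch())
            self._loaded_at = now
        return self._terms

    def is_blocked(self, word: str) -> bool:
        return word.casefold() in self.terms()


# Usage with a fake clock and a fake source that "learns" a new term later.
fake_now = [0.0]
updates = iter([["oldslang"], ["oldslang", "newslang"]])
lexicon = RefreshingLexicon(lambda: next(updates), clock=lambda: fake_now[0])
print(lexicon.is_blocked("newslang"))  # False
fake_now[0] = TWELVE_HOURS
print(lexicon.is_blocked("NewSlang"))  # True
```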

The evolving landscape of content regulation in AI.

The realm of AI content generation is in its nascent stages, and as it grows, so will its regulatory landscape:

- Government Regulations: Governments worldwide might introduce more stringent AI content regulations. Just as vehicle emission standards have become stricter over time, AI content regulations might see periodic tightening.

- Universal Content Standards: International bodies could come together to set global AI content standards, much like ISO standards for manufacturing. Adhering to these would ensure AI platforms maintain a quality equivalent to a watch that deviates by only 3 seconds a year.

- Ethical AI Movements: With AI’s growing influence, movements advocating for ethical AI use might gain momentum.

- User-Driven Content Policies: As users become more aware and vocal, their feedback might significantly shape AI content policies, much as consumer demand for portability has pushed laptop makers toward lighter designs.

With technological advancements and societal shifts, the AI content generation domain will remain dynamic, with platforms like Character.ai continuously adapting to ensure safety, relevance, and excellence.

Conclusion

Reiterating the importance of safe AI usage.

The digital age, with AI at its forefront, promises unparalleled opportunities and advancements. Yet, with this potential comes the imperative of safe usage. Just as the introduction of electricity transformed societies but also necessitated safety protocols to prevent accidents, the rise of AI demands rigorous content safeguards.

Encouraging user feedback and collaboration.

While AI platforms like Character.ai employ advanced algorithms and tools for content regulation, the user community remains an invaluable asset. User feedback is the cornerstone of continuous improvement, much as customer reviews shape the design of a bestselling gadget. By actively participating, collaborating, and providing feedback, users play a pivotal role in shaping the AI ecosystem. Character.ai recognizes this and allocates an annual budget of approximately $500,000 to facilitate and incentivize user feedback initiatives. In essence, the road to AI excellence is a collaborative journey in which platforms and users work together to ensure that the technology serves humanity safely, ethically, and effectively.